In under three years, generative AI has gone from a curiosity to a central part of board discussions. And if you think that’s fast, just wait for the next three years.

But if the opportunities are enormous, so are the perils of getting it wrong—a worry that’s growing as so many companies rush headlong into development and deployment. The key to winning with AI, as everyone reading this likely knows, is to nail the balance and build the right governance structures now so that companies can move forward with confidence.

To help, Corporate Board Member convened a distinguished panel of technology and governance experts on the sidelines of the third annual Digital Trust Summit in Washington, D.C., to offer practical insight into navigating the complex AI landscape. On hand: Dominique Shelton Leipzig, CEO of Global Data Innovation and founder of the Digital Trust Summit; Siobhan Mc Feeney, former Kohl’s chief technology officer; Will Lee, CEO of Adweek; Bhawna Singh, CTO of Okta; Dr. Christopher DiCarlo, philosopher, ethicist and author of Building a God: The Ethics of Artificial Intelligence; and Christine Heckart, founder and CEO of Xapa and a board member at SiTime and Contentful.

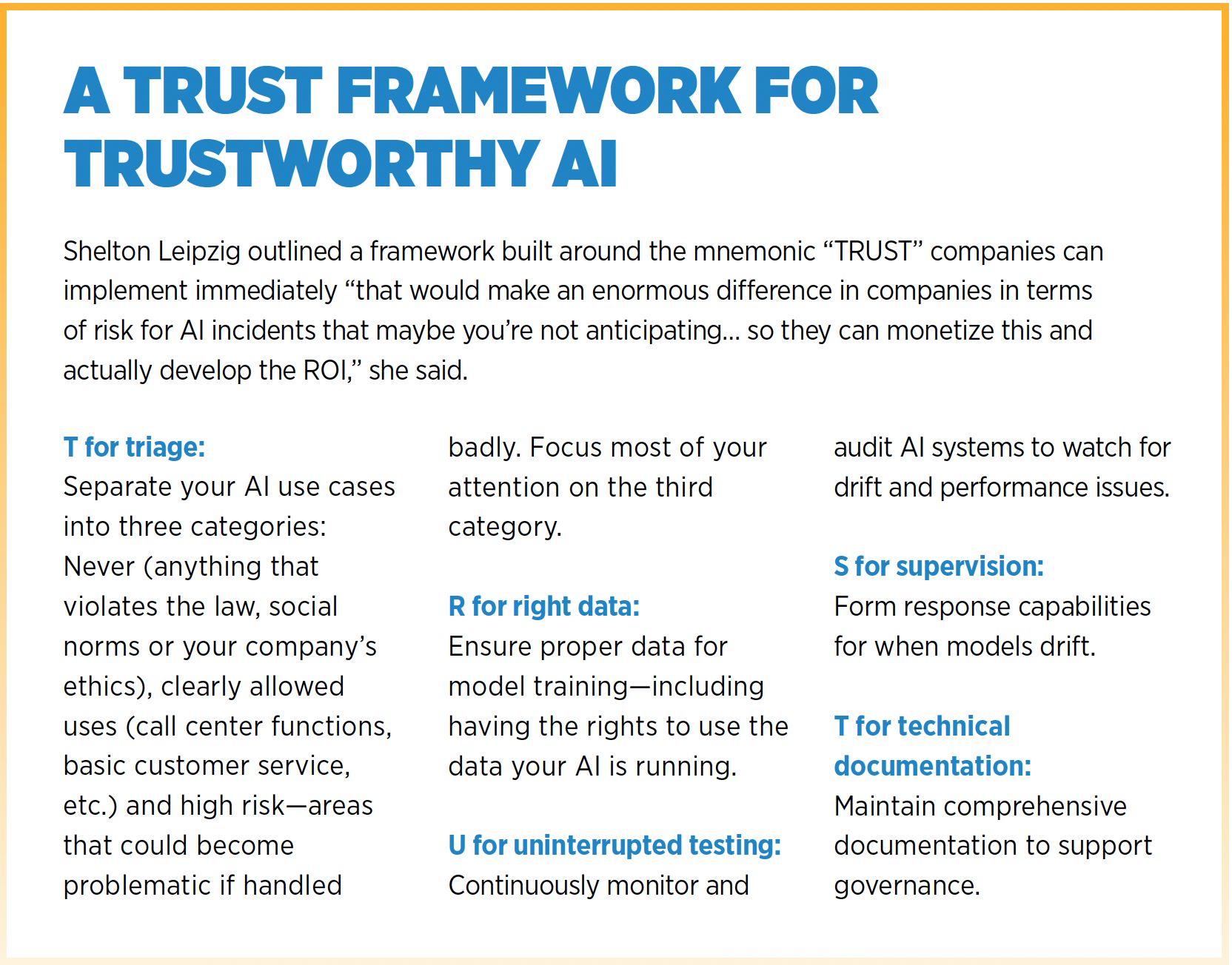

“In the next 15 to 18 months, the AI use cases being piloted are going to explode,” said Shelton Leipzig, who has worked with boards globally to try to build the right governance structures to mitigate AI risk. “Our opportunity to ensure that our values, our mission, our purpose as a company are embedded into the AI: that opportunity window is closing rapidly. Waiting has not served us well with technology. We have to get ahead of this and control and prioritize.”

“We’re at a point in history where we’re in between the way everything used to be done and how everything is going to be done,” said DiCarlo. “This is a very unique point that we’re living in right now. To echo Dominique, we better get it right. We might only have one shot to get this right, so let’s do that now.”

Still, Singh said, it is a hopeful moment, if only because that window has not yet closed. “All of us are now moving into a profit or an outcome phase. Our leaders are listening, they are looking for information. They are looking for data. They are looking for guidance. We are in that phase, so this is a good time to actually get the word out, get the information out. Because they are absolutely ready to listen because now they are pushing stuff into production.”

Legal Risks

As AI systems become more integrated into business operations, the panel highlighted growing risks for companies that fail to establish appropriate governance. While the regulatory landscape is still evolving, other mechanisms are already creating accountability, from reputational pressure to legal liability.

“I’ve spoken to CEOs—big agencies, small agencies—they all have a lot of really good ideas about how to optimize business with AI,” said Lee. “I would say that 99 out of 100 of them don’t have any idea about the governance and putting guardrails around it. They’re like, ‘Oh, yeah, that’s the CTO’s job. That’s the CIO’s job, right?'”

Companies developing AI systems may be held accountable for those systems’ impacts in the same way makers of other products in the marketplace are. That could mark a departure from the rise of social media, when platforms benefited from legal protections dating to the earliest days of the commercial internet, protections that don’t exist for AI. “If you are building products being used by consumers, just like any other thing,” said Singh, it is likely that “you are also responsible to the negative implication or the impact of it.”

Shelton Leipzig pointed to lawsuits against one AI company alleging that children became overly attached to its AI and that suicides resulted.

Responsibility ultimately rests with company leadership, not with the technology itself, as another case Shelton Leipzig described makes clear. At one retailer, a vendor’s AI was misidentifying paying customers as criminals. The CIO, the CTO and the vendor were nowhere to be found in the government investigation, the lawsuits that ensued, the shareholder derivative actions or the bankruptcy protection that followed. “Because the reality is when an AI incident occurs, it’s not the CTO, it’s not the CIO that’s testifying in Congress or having to answer for it,” she said.

Singh said she hopes CEO and board attention will grow fast now that AI is entering a more mature, customer-facing era. “When you land in a place where you’re making money with something, all the other aspects of governance, compliance, all those land there right away because my leader has eyes on it, security has eyes on it, your regular rhythm has eyes on it.”

Getting Up to Speed

All of which, panelists agreed, requires a new approach to corporate oversight. Like cybersecurity, AI is a complex and fast-changing technology challenge that could quickly tank a company’s reputation if problems arise. But unlike cyber, AI will likely affect, and penetrate, many parts of the business simultaneously. Rather than treating AI as a compliance matter, it is far more important for boards to think holistically about how it will shape the business, from productivity to culture and from brand to business model.

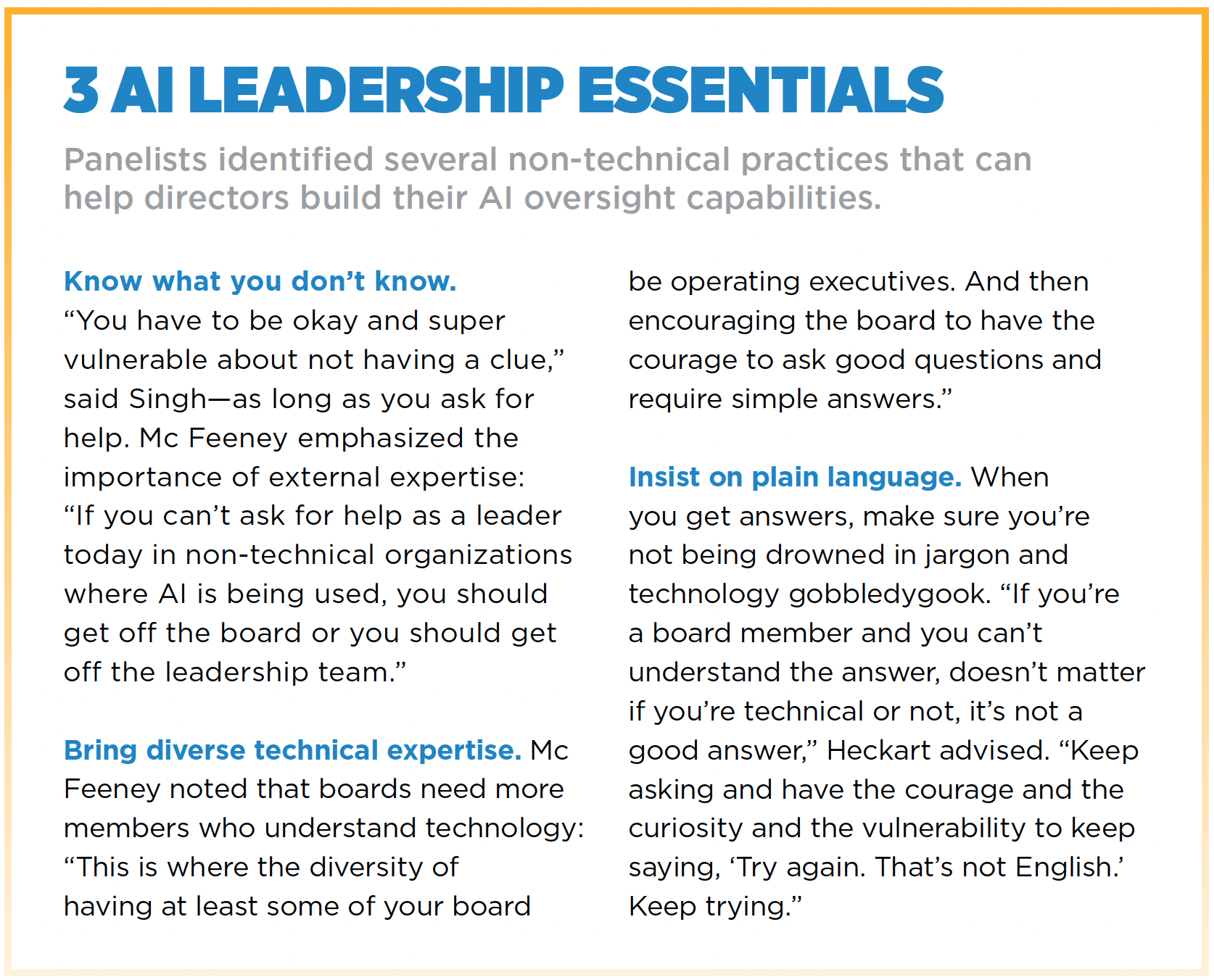

The challenges of doing so, said Mc Feeney, are particularly acute for traditional enterprises that may not be Sand Hill Road regulars. “Most industries, they’re not technology companies,” she said. “They’re not Okta and Salesforce and Google. They’re retailers, and they’re manufacturing companies. And they’re not at the same pace, and they do have really thoughtful boards who don’t even play with AI themselves and who are trying to learn.”

That learning can be tricky, they all acknowledged, given an industrywide shortage of practical AI training for directors and senior leaders. Directors, especially those without a deep technical background in AI, would be well served to make time for hands-on experimentation with commercially available products such as ChatGPT and Claude, using noncritical public information, just to see what the tools can do.

“What boards lack is the right questions to ask and the ability, when they get answers, to make any sense out of the answers,” said Heckart. “It’s too complex to be meaningful to most of the board members. The average public board is not made up of a bunch of technologists. It’s made up of a bunch of people from industry who do not understand at all what is technically happening, and therefore, the associated risk and how the leg bone is connected to the ear bone. Because that’s what’s happening here. There’s so much connectivity that’s unseen and misunderstood or not understood.”

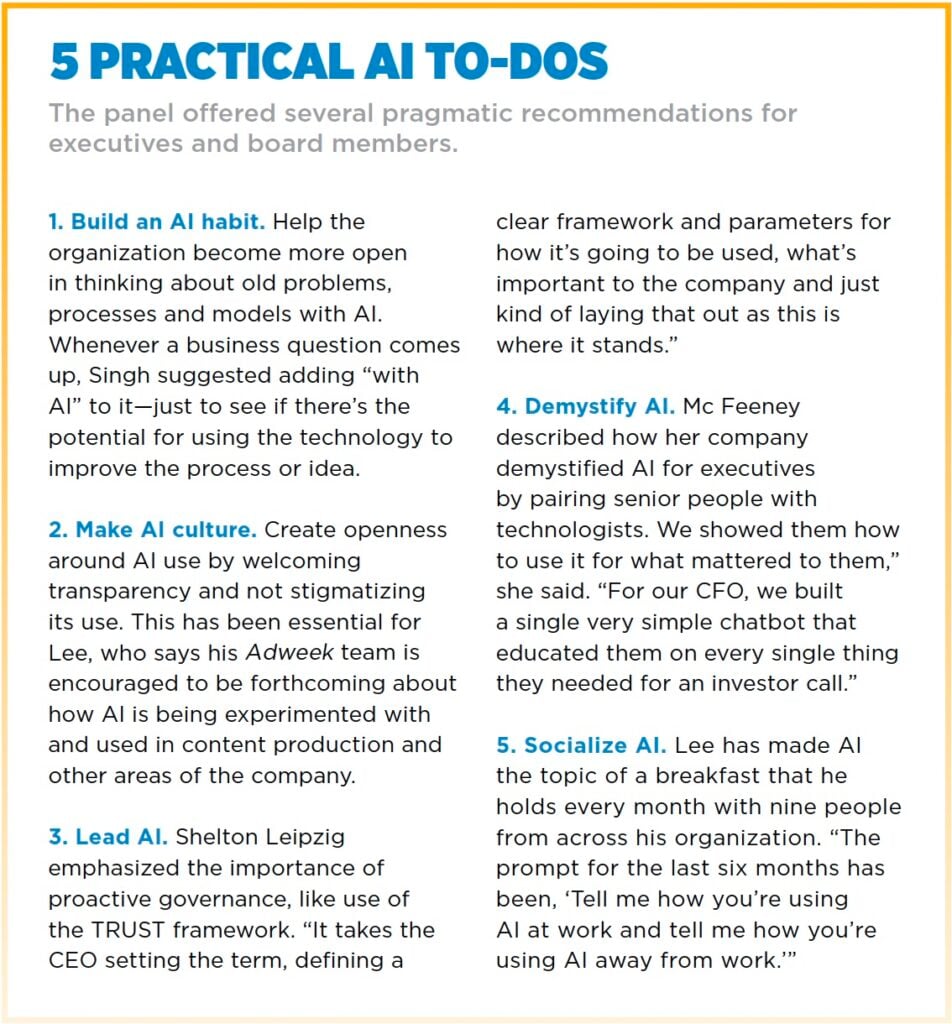

Mc Feeney tackled the challenge of familiarizing the board and senior leadership with AI through simple “games,” such as asking board members to photograph the contents of their fridge and ask ChatGPT what they could make for dinner. “Make it so accessible so it’s not terrifying,” she said. “So if they can touch it and use it, suddenly there’s a conversation around it.”

“I certainly have found, a lot of people, candidly, they’re embarrassed. They don’t really understand it,” said Mc Feeney. “We paired senior people up, and we showed them how to use it [in ways] that mattered to them. For our CFO, we built a single very simple chatbot that educated them on every single thing they needed for an investor call, enabled them to do it themselves.”

AI fluency is also table stakes for leaders who wish to thrive in the years to come, Lee said, even if too many of his peers have not yet found the time to develop it. His advice: make time to play with it, if only to avoid the trap of eventually having a staff that is far more AI literate than you are. “That creates a lot of tension. It creates suspicion. It creates a lot of unintended consequences.”

At Adweek, Lee has made AI the central topic of a monthly lunch he holds with people from across the organization, simply asking them what they’re trying out with AI. He has also been transparent about his own use of the technology and is working to remove the stigma many creative people attach to publicly acknowledging their use of generative AI as a tool. Hiding the use of AI will only increase the risks.

“We’re a business publication so there is a fair amount of analysis and data, number crunching that we do. Let’s just be transparent. Then also, don’t misrepresent. I told the staff, ‘When you’re coming up with ideas or you’re using Claude as a copywriter partner, just be transparent about it. Say, “So I ran this through Claude.”’ Because then it’ll just be part of our workflow, and we don’t have to apologize for it.”

Building Culture

That kind of culture building is essential right now if you want AI to fulfill its promise because, as Heckart reminded everyone, for all its ability, generative AI is still just a machine, and it is a long way from becoming anything like sentient. The key to getting the most out of AI is, and will remain, helping the humans who work with it.

This requires organizations to rethink leadership development at every level, especially at a time when powerful AI is fast becoming part of everyday work in nearly every industry. “Just having leadership concentrated at the top is an archaic model,” she said. “If you graduate college now and you’re lucky enough to be hired somewhere (because there aren’t as many entry-level jobs), the day you walk in the door, you’re a manager. You’re a manager of AI agents.”

Her top piece of advice for leaders right now: “Pay attention to your people because businesses for a long time are still going to need humans, and the humans can be better humans with AI, or they can be worse humans,” she said. “The mediocrity can scale, or you can use AI to uplift. But it all starts with the quality of the humans and their EQ and their leadership skills and their critical thinking skills.”

As DiCarlo said, “We’re kind of at our dot-com moment, aren’t we? We’re at that point where everybody knows it’s big, but they’re not quite sure of how it’s going to be integrated and implemented.” His bet: It will fundamentally change society on a scale few in business can comprehend.

“This is going to shape and change the way societies function,” he said. “It’s difficult for me to say calmly how big this is and how big this is going to be and how drastically this is going to affect everyone’s lives. So it’s cool that we’re sitting and we’re thinking about this now because that’s exactly what we need to be doing.”