AI is now a major boardroom topic, promising significant benefits but also introducing new risks, with an unprecedented ability to scale results in both directions. The timeline is striking: ChatGPT put AI on the boardroom map in 2023, followed by two years of pilots and proofs of concept. Now, in 2026, it’s embedded in production across most industries, and at last month’s Davos gathering, tech leaders emphasized that we’re entering a new phase: agentic AI systems that don’t just assist but act autonomously. Anthropic CEO Dario Amodei warned that the next few years will be critical for how we regulate and govern these systems before they evolve beyond human control.

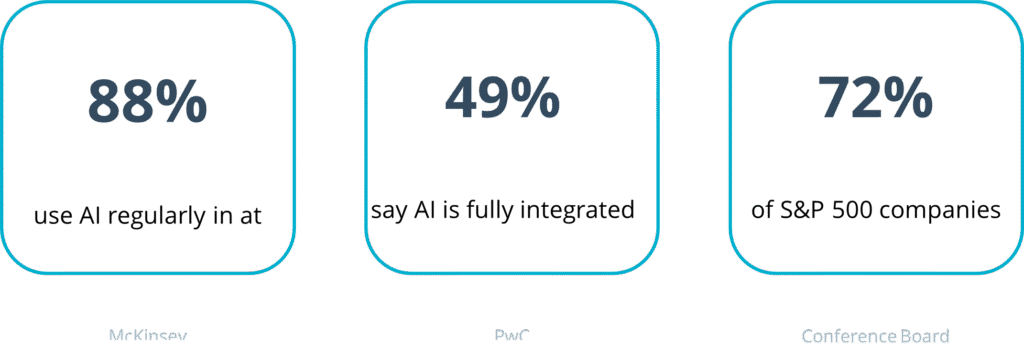

Boards are approving deployments faster than oversight is scaling: 88 percent of companies use AI regularly in at least one business function, and it’s becoming fundamental to operations. Every use case requires governance. Boards are still catching up not just to what AI can do, but to what it can cost them when it goes wrong. Massachusetts fined a lender $2.5 million for unlawful AI practices. Deloitte refunded the Australian government for AI-generated errors. Cigna faces class-action litigation over algorithms that denied more than 300,000 Medicare claims.

And it’s broader than the headlines: EY’s Responsible AI survey found 99 percent of organizations reported AI-related financial losses, with 64 percent exceeding $1 million. Most boards can’t answer essential audit questions about how their AI systems make decisions; when regulators ask to see how a model reached its decision, the evidence trail is almost never crisp. An indication of the scope of the risk is that 72 percent of S&P 500 companies now flag AI as a material risk in their financial disclosures.

The question is, why are audit trails so elusive for AI? Having helped IBM navigate AI governance as Deputy Chief Auditor and served on their AI Ethics Board evaluating client use cases, I’ve watched this regulatory gap grow wider, all while boards feel competitive pressure to approve deployments faster.

The Audit Evidence Gap

Here’s the core problem: Traditional audits assume you can retrace a decision. With AI, that’s often impossible. AI models make thousands of decisions per day. Some have human sign-offs for key calls, but the rigor of those reviews varies wildly—and often, the human reviewer is simply confirming what the algorithm already decided. The logic behind AI’s choices lives in statistical patterns across millions of data points, patterns that even the model’s own developers struggle to articulate. And unlike traditional processes, AI can produce different outputs on each run, even when it’s working exactly as designed.

That’s what earns AI the “black box” label: You can see what went in and what came out, but the reasoning in between stays hidden. For audit teams, that means a fundamental shift: from asking “was the process followed correctly?” to asking “is this output within the acceptable range?”
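For readers who want to see what an "acceptable range" test might look like in practice, here is a minimal sketch in Python. It assumes the model returns a numeric score and that the business has agreed on bounds and a maximum run-to-run spread; the `RangeCheck` class, its fields, and the sample scores are all hypothetical illustrations, not a standard audit method.

```python
import statistics
from dataclasses import dataclass


@dataclass
class RangeCheck:
    """Hypothetical acceptance band for a model that returns a numeric score."""
    lower: float       # lowest acceptable output (assumed business rule)
    upper: float       # highest acceptable output (assumed business rule)
    max_spread: float  # largest tolerated gap between repeated runs

    def evaluate(self, outputs):
        """Summarize repeated runs on the same input as audit evidence."""
        out_of_range = [x for x in outputs if not (self.lower <= x <= self.upper)]
        spread = max(outputs) - min(outputs)
        return {
            "runs": len(outputs),
            "mean": statistics.mean(outputs),
            "spread": spread,
            "out_of_range": out_of_range,
            "passed": not out_of_range and spread <= self.max_spread,
        }


# Illustrative scores from re-running the same input four times.
check = RangeCheck(lower=0.0, upper=1.0, max_spread=0.10)
stable = check.evaluate([0.62, 0.64, 0.61, 0.66])
unstable = check.evaluate([0.62, 0.91, 0.40, 1.05])
```

The point of the sketch is that the evidence is a record of repeated runs and the band they were tested against, rather than a step-by-step trace of the model's reasoning.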

The Agentic Inflection Point

The governance challenge is about to intensify. The AI systems that boards approved last year were assistive, recommending actions that humans executed. The systems being deployed now are agentic, acting autonomously. At Davos last month, leaders grappled with AI that operates at what cybersecurity experts call “machine speed”: autonomous agents that execute decisions, trigger workflows and interact with other systems without waiting on human approval.

These aren’t hypothetical concerns: Salesforce demonstrated autonomous agents booking meetings and managing schedules for conference attendees, and companies are deploying agents in IT operations, customer service and procurement. That further widens the governance gap because traditional quarterly audits can’t keep pace with systems that evolve daily and make thousands of autonomous decisions. Boards need real-time monitoring instead of the traditional periodic reviews.

What Regulators Are Demanding

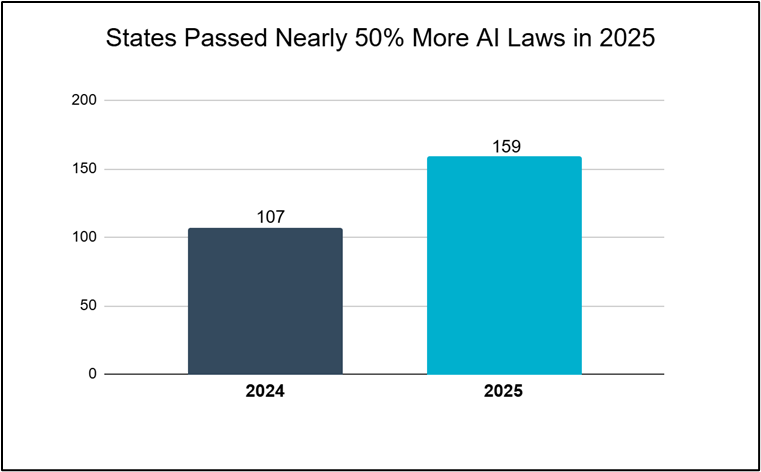

Regulators have noticed the new challenge from AI, and they’re responding. U.S. states enacted 159 AI laws in 2025, up from 107 in 2024. The EU AI Act begins enforcing high-risk system requirements on August 2, 2026, and the FTC issued compulsory orders to seven companies demanding comprehensive AI audit documentation. With this kind of aggressive activity, audit committees should be prepared for regulators to ask:

- Can you identify all your AI systems in production, with risk classifications?

- How do you validate model accuracy and fairness?

- What’s your process for handling model failures or biased outcomes?

- Who is accountable when AI makes the wrong decision?

- How do you ensure AI decisions comply with existing regulations like fair lending or anti-discrimination laws?
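The first and fourth questions above (inventory and accountability) lend themselves to a simple register. The sketch below, in Python, shows one possible shape for such a register and a check for obvious gaps; the `AISystem` fields, the three-level `RiskTier`, and the example entries are assumptions made for illustration, not a regulatory template.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AISystem:
    """One row in a hypothetical AI inventory."""
    name: str
    business_function: str
    risk_tier: RiskTier
    accountable_owner: str  # empty string means nobody is assigned
    last_validated: str     # ISO date of last accuracy/fairness review, "" if never


def inventory_gaps(systems):
    """Return {system name: [issues]} for entries a regulator would question."""
    gaps = {}
    for s in systems:
        issues = []
        if not s.accountable_owner:
            issues.append("no accountable owner")
        if s.risk_tier is RiskTier.HIGH and not s.last_validated:
            issues.append("high-risk system never validated")
        if issues:
            gaps[s.name] = issues
    return gaps


# Illustrative entries only.
inventory = [
    AISystem("loan-scoring", "lending", RiskTier.HIGH, "Chief Risk Officer", "2026-01-15"),
    AISystem("claims-triage", "operations", RiskTier.HIGH, "", ""),
    AISystem("meeting-scheduler", "sales", RiskTier.LOW, "VP Sales", ""),
]
gaps = inventory_gaps(inventory)
```

Even a register this simple gives the audit committee something concrete to request: a list of systems, a tier for each, a named owner, and a validation date.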

The emerging picture is one where regulators want the same rigor for AI that exists for financial controls. The good news? We’ve been here before. Sarbanes-Oxley felt daunting at first, and now it’s just part of how companies operate. The bad news? You have less time this round. Sarbanes-Oxley gave companies years to comply; the EU AI Act gives you months, and the FTC is issuing orders now. AI governance will follow the same arc, but right now the standards are still being written, and that’s both a challenge and an opportunity.

The Audit Committee’s Oversight Mandate

In most organizations, AI governance falls to the audit committee, which sits at the intersection of risk, compliance, controls and financial reporting. But this isn’t solely an audit committee mandate.

The Board’s Dual Mandate: Risk Mitigation and Value Creation

AI governance is about more than avoiding fines; it’s about unlocking long-term competitive advantage. Companies with mature governance frameworks can move from pilot to production faster because risk assessment processes and approval workflows are already in place. The board oversees both dimensions:

For the Audit Committee:

- AI inventory & risk classification: Demand visibility into every AI system in production, including shadow AI deployed through third-party tools and browser copilots. Require management to assign clear risk tiers and decision authorities to each application.

- Governance frameworks: Extend existing change control and testing processes to cover AI systems, treating them as part of the broader control environment. Ensure cross-functional accountability with CEO and CFO sponsorship, recognizing that AI rarely stays within one business function.

- Real-time assurance: Push beyond quarterly audits to continuous monitoring systems that catch algorithmic drift and bias as they happen. Require that results be reported directly to the audit committee, enabling intervention before small issues become regulatory problems.
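To make the continuous-monitoring item above concrete, here is a minimal sketch in Python of one common drift measure, the Population Stability Index (PSI), which compares how a model's scores are distributed today against a baseline. The function names, the four-bin example data, and the 0.10 and 0.25 thresholds (a widely used rule of thumb, not a regulatory standard) are assumptions for illustration.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Floor each proportion so an empty bin does not cause log(0).
        e = max(e, eps)
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi, warn=0.10, alert=0.25):
    """Map a PSI value to an escalation level (thresholds are assumed)."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "ok"


# Share of model scores falling into four score bands (illustrative data).
baseline = [0.25, 0.25, 0.25, 0.25]
this_week = [0.10, 0.20, 0.30, 0.40]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, this_week)
status = drift_status(psi_shifted)
```

Run on a schedule, a check like this turns "drift" from an abstract concern into a number with an escalation path, which is the kind of result that can be reported to the audit committee between quarterly reviews.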

For the Full Board:

- Strategic AI portfolio: Review AI investments alongside M&A and R&D decisions, applying the same rigor. Demand clear ROI hurdles, risk-adjusted returns, and kill criteria for underperforming AI initiatives.

- Trust & velocity metrics: Track whether AI governance is accelerating or constraining the business through customer trust scores, partner confidence indicators, and deployment velocity compared to industry benchmarks. Recognize that strong governance builds trust that enables deals competitors can’t close.

- Agentic readiness: Ask which systems are moving from assistive to autonomous, what guardrails exist for autonomous decision-making, and whether the organization can demonstrate control when regulators or customers ask. Position the board ahead of the next wave of AI governance challenges.

The Window Is Closing

The pace isn’t slowing. Agentic AI is already here, and companies racing ahead without governance are putting stakeholders at real risk. Multi-million-dollar settlements and FTC enforcement signal we’re at an inflection point for AI oversight. Just like the early days of Sarbanes-Oxley compliance, early adopters will fare better by building governance into culture before regulations force rushed, costly implementations. Boards that act now will avoid scrambling when federal regulations are finalized, a new state law is passed or a customer complains to the FTC.

Having built audit programs and governance frameworks at Fortune 50 scale, I’ve witnessed early investment in governance infrastructure paying dividends when regulatory scrutiny arrives. The boards that lead on AI governance will discover something unexpected: Mature governance isn’t a constraint on innovation—it’s an accelerator. When you can demonstrate robust AI governance to customers, partners and regulators, you close deals that competitors can’t. It’s not a burden; it’s the seatbelt that lets you go faster.